Hi, I’m Michael Ryan, an incoming PhD student studying Artificial Intelligence at Stanford University. I’m fortunate to be doing NLP research as a member of Dr. Diyi Yang’s SALT Lab! I’m also a core contributor to StanfordNLP/DSPy – the library for programming, not prompting, LLMs. I’m on the DSPy optimizer team and am the co-creator of the DSPy MIPROv2 optimizer.

My research interest is Human-Centered NLP, which I pursue in two directions: LLM personalization for various cultures, languages, and individuals [1] [2] [3], and leveraging humans for system design and feedback to make better AI systems [4]. Previously I was an undergraduate researcher in Dr. Wei Xu’s NLP X Lab at Georgia Tech and a research intern at Snowflake.

Have a look at my CV, or if you’re in a hurry, check out my resume!

- LLM Personalization

- Human-Centered NLP

- Compound LM Systems

- LM System Optimization

PhD in Computer Science, 202X

Stanford University

MS in Computer Science, 2025

Stanford University

BSc in Computer Science (Intelligence & Systems/Architecture), 2023

Georgia Institute of Technology

Selected Research

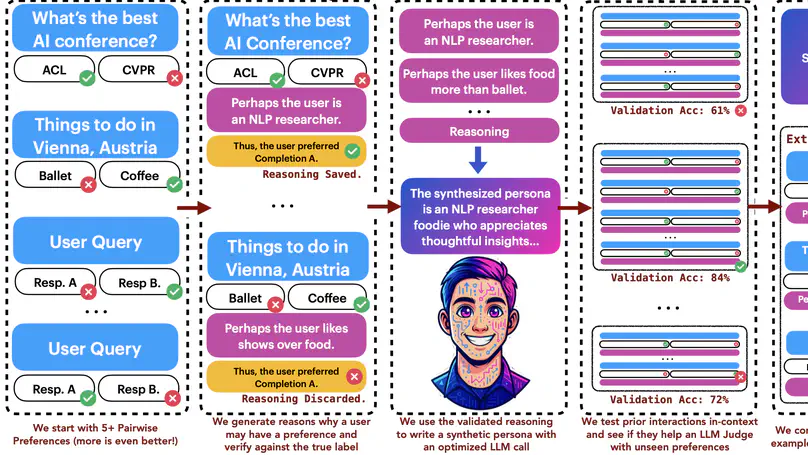

Recent calls for pluralistic alignment of Large Language Models (LLMs) encourage adapting models to diverse user preferences. However, most prior work on personalized reward models relies heavily on additional identity information, such as demographic details or a predefined set of preference categories. To this end, we introduce SynthesizeMe, an approach to inducing synthetic user personas from user interactions for personalized reward modeling. SynthesizeMe first generates and verifies reasoning to explain user preferences, then induces synthetic user personas from that reasoning, and finally filters to informative prior user interactions in order to build personalized prompts for a particular user. We show that using SynthesizeMe-induced prompts improves personalized LLM-as-a-judge accuracy by 4.4% on Chatbot Arena. Combining SynthesizeMe-derived prompts with a reward model achieves top performance on PersonalRewardBench, a new curation of user-stratified interactions with chatbots collected from 854 users of Chatbot Arena and PRISM.

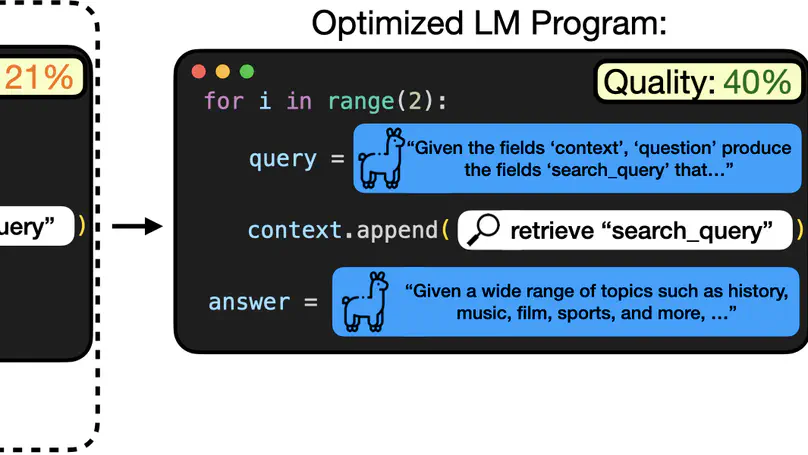

We present MIPROv2, a language model program optimizer which improves both prompts and few-shot demonstrations for multi-stage language model programs. Our strategies include (i) program- and data-aware techniques for proposing effective instructions, (ii) a stochastic mini-batch evaluation function for learning a surrogate model of our objective, and (iii) a meta-optimization procedure in which we refine how LMs construct proposals over time. MIPRO outperforms baseline optimizers on five of seven diverse multi-stage LM programs using a best-in-class open-source model (Llama-3-8B), by as much as 13% accuracy.

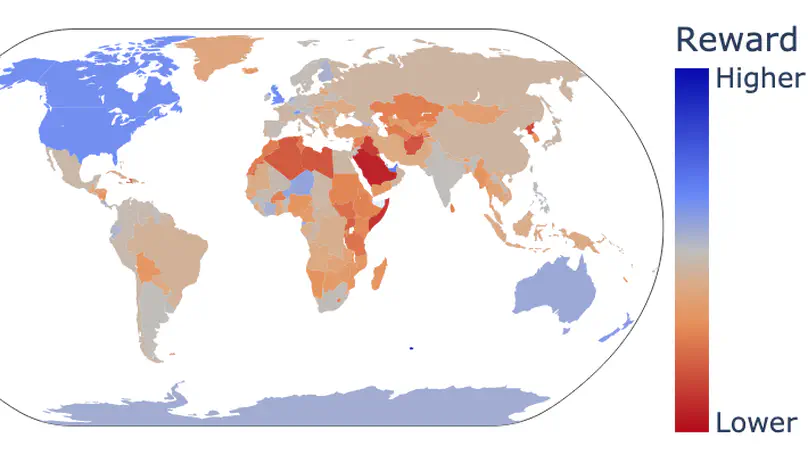

We explore how alignment impacts performance along three axes of global representation: English dialects, multilingualism, and opinions from and about countries worldwide. Our results show that current alignment procedures create disparities between English dialects and global opinions. We find alignment improves capabilities in several languages. We conclude by discussing design decisions that led to these unintended impacts and recommendations for more equitable preference tuning.

Teaching

Work Experience

- Designed and programmed a static analysis tool in C++ for identifying security vulnerabilities throughout Windows OS.

- Refactored existing codebase from .NET Framework to .NET Core.

- Ported server-specific architecture to serverless functional units using Azure Durable Functions.

- Implemented end-to-end testing in Go for bike, scooter, and moped rentals by building a simulated third-party CRUD API.